In our last post, we explained why traditional approaches to protecting energy data neither provide enough access to energy data to meet our decarbonization goals nor adequately protect customer data, and how differential privacy is different. In this post, we explain in more detail how differential privacy works.

Keeping information private can be harder to achieve than you might expect.

Your friends and family know that you have a nut allergy, but it is not something you want everyone to know. Being able to keep that kind of information to yourself is what we mean by privacy.

If you go out to eat with a group, the person making dinner needs to know if someone in the group has a nut allergy. But the chef doesn’t necessarily need to know who is the one who can't have their famous peanut sauce. They just need to know that of the people at dinner, someone can't have nuts.

Now imagine the person or people with the nut allergy leave the table. For dessert, the table lets the waiter know that Pad Thai is now OK to serve. Oops! Now the waiter knows exactly who was allergic. So much for privacy.

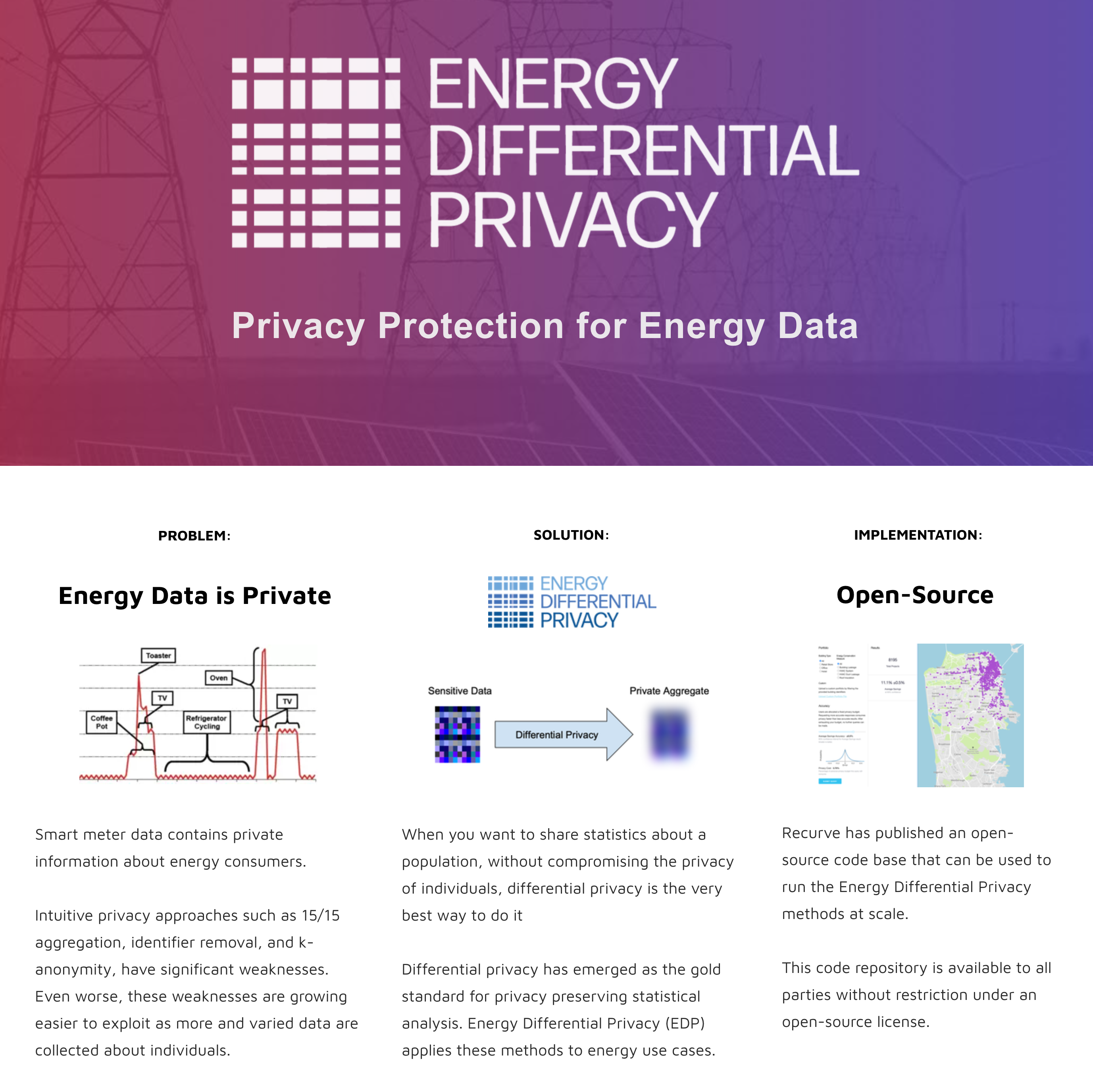

Differential privacy works to protect against these kinds of scenarios by adding noise to statistics in order to blur the contribution of individuals, while preserving the information about the group.

In the example above, this means that some known percentage of the time, the chef receives reports of nut allergies that were just made up by the server. So the chef is never sure if it's a real allergy being reported, or just a practical joke (aka random noise).
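The dinner example resembles a classic technique called randomized response. Here is a minimal Python sketch of it, under assumptions of our own: the function names, the 75% truth rate, and the made-up table of 1,000 diners are illustrative only and are not taken from any particular implementation. Each report is truthful with a known probability and otherwise a coin flip, so no single report can be trusted, yet the group-level rate can still be estimated.

```python
import random

def noisy_report(has_allergy: bool, p_truth: float, rng: random.Random) -> bool:
    """Report the true answer with probability p_truth, otherwise a coin flip."""
    if rng.random() < p_truth:
        return has_allergy
    return rng.random() < 0.5

def estimate_true_rate(reports: list[bool], p_truth: float) -> float:
    """Undo the known noise rate to estimate the group-level allergy rate."""
    observed = sum(reports) / len(reports)
    # observed = p_truth * true_rate + (1 - p_truth) * 0.5, solved for true_rate
    return (observed - (1 - p_truth) * 0.5) / p_truth

rng = random.Random(0)
diners = [True] * 100 + [False] * 900  # hypothetical: 10% truly allergic
reports = [noisy_report(d, p_truth=0.75, rng=rng) for d in diners]
estimate = estimate_true_rate(reports, p_truth=0.75)
print(f"estimated allergy rate: {estimate:.3f}")
```

Because the noise rate is known, the chef can correct for it across the whole group, while any individual "allergic" report remains plausibly a random one.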

Imagine it like pixelating an image. With a small amount of noise added, you could still tell what the image is, but you might not necessarily be able to identify individual details about it.

Adding more noise makes data more anonymous, but also less useful. Determining the appropriate trade-off for this parameter depends on the use-case. For example, Google sharing individuals’ location data is about as sensitive as it comes, but a carbon derivative or savings from an efficiency project is already abstracted and carries much less risk.
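To make the trade-off concrete, here is a rough Python sketch using the Laplace mechanism, one common way of adding noise to a sum. The usage values, the 50 kWh cap, and the helper names are hypothetical, and the privacy parameter is called `epsilon` (smaller values mean more noise and stronger privacy). It is meant only to show how error grows as privacy is tightened, not to represent a production method.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def noisy_total(usages, cap, epsilon, rng):
    """Release a clipped sum with Laplace noise of scale cap / epsilon."""
    clipped = [min(max(u, 0.0), cap) for u in usages]  # bound each contribution
    return sum(clipped) + laplace_noise(cap / epsilon, rng)

def mean_abs_error(usages, cap, epsilon, trials=2000, seed=0):
    """Average distance between the noisy release and the clipped true total."""
    rng = random.Random(seed)
    true_total = sum(min(max(u, 0.0), cap) for u in usages)
    errors = [abs(noisy_total(usages, cap, epsilon, rng) - true_total)
              for _ in range(trials)]
    return sum(errors) / trials

usages = [12.0, 30.5, 8.2, 45.0, 22.1]  # hypothetical daily kWh readings
error_strong_privacy = mean_abs_error(usages, cap=50.0, epsilon=0.1)
error_weak_privacy = mean_abs_error(usages, cap=50.0, epsilon=1.0)
print(error_strong_privacy, error_weak_privacy)
```

With only five customers the noise at either setting swamps the true total, which is one reason differentially private statistics are generally more useful over larger groups.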

For a further general introduction, this video covers some of the very basics:

The noise added by a differential privacy mechanism guarantees that an attacker with perfect information about a database can only increase their knowledge about whether an individual’s data was included in the dataset up to a threshold set by the parameter ε. Mathematically, ε bounds the ratio between the probabilities of seeing the same output from two databases that differ by a single element: that ratio can be at most e^ε. The bound must hold for all possible outputs and all possible pairs of neighboring databases.
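Written out, the standard textbook definition of ε-differential privacy reads as follows. The symbols are our notation rather than anything defined earlier in this post: M is the randomized mechanism, D and D′ are any two neighboring databases, and S is any set of possible outputs.

```latex
\Pr[\,M(D) \in S\,] \;\le\; e^{\varepsilon} \cdot \Pr[\,M(D') \in S\,]
```

When ε is close to zero, the two probabilities are nearly equal, so the output reveals almost nothing about whether any one individual's data was included.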

A fundamental concept in differential privacy is the “privacy budget.” Every anonymization technique, from k-anonymity, to the 15/15 rule, to differential privacy, reveals some amount of information about the individuals in the dataset. Differential privacy makes that leakage explicit by measuring it in ε and capping the total that may be spent across releases. Other techniques offer no such accounting. For example, if one could find out the consumption information for 14 of the 15 individuals in a dataset anonymized by the 15/15 rule, it would be possible to deduce that final participant’s energy usage.
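The 15/15 example comes down to simple subtraction. The sketch below uses made-up monthly usage figures and a hypothetical helper name to show how an exact published total, combined with outside knowledge of 14 customers, gives away the 15th.

```python
def recover_last_customer(published_total: float, known_usages: list[float]) -> float:
    """Subtract the usages an attacker already knows from the exact total."""
    return published_total - sum(known_usages)

# Hypothetical monthly kWh for 15 customers in one aggregate
usages = [300.0 + 10 * i for i in range(15)]
published_total = sum(usages)   # the exact aggregate that gets released
known_to_attacker = usages[:14]  # learned through other channels

recovered = recover_last_customer(published_total, known_to_attacker)
print(recovered == usages[14])
```

Nothing about the aggregation rule stops this, because the released total is exact. Adding calibrated noise to the total is what blunts this kind of differencing.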

Given these vulnerabilities in existing privacy practices, the guarantees offered by differential privacy mechanisms have several highly desirable properties.

- They are composable: it is possible to compute an exact bound on the total privacy loss from multiple statistical releases. Each additional release degrades the privacy guarantee gracefully rather than causing it to fail catastrophically, so privacy is not an all-or-nothing outcome.

- The privacy protections are not dependent on an attacker’s existing level of knowledge.

- Finally, the outputs of a differentially private mechanism are robust to post-processing: no amount of additional computation on those outputs alone can weaken the privacy guarantee.
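Composability is what makes a privacy budget something you can actually keep track of. Under basic sequential composition, the ε values of successive releases on the same data simply add up. The small accountant below is a hypothetical sketch of that bookkeeping (the class name, the total budget of 1.0, and the per-release costs are all assumptions for illustration), not a description of any specific library.

```python
class PrivacyBudget:
    """Track cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon: float):
        self.total_epsilon = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self) -> float:
        return self.total_epsilon - self.spent

    def spend(self, epsilon: float) -> None:
        """Charge one release against the budget, or refuse if it would overdraw."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if self.spent + epsilon > self.total_epsilon:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon

budget = PrivacyBudget(total_epsilon=1.0)
for _ in range(3):
    budget.spend(0.25)  # three releases, each costing epsilon = 0.25

refused = False
try:
    budget.spend(0.5)  # would bring the total to 1.25, over the budget
except RuntimeError:
    refused = True
```

Tighter composition bounds exist for many releases, but simple addition is always a valid upper bound for pure ε-differential privacy.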

So those are the basics of differential privacy.

But as usual, the difference between the basic idea and real application is vast. Dealing with energy use data carries its own specific privacy challenges we have had to adjust for, such as the recognizable patterns in building types, occupancy, and weather.

Recurve has been working with leading differential privacy researchers to define a range of real-world use-cases and methods that enable us to keep the baby and throw out the bathwater for each. But there truly is no one-size-fits-all differential privacy solution.

As usual, all of our methods are open-source and available for collaboration. We would like to thank our friends at the City of San Francisco, NREL, and DOE for delving into this topic with us.

Stay tuned for our next blog, which delves into how differential privacy is being used to unlock the value in data while providing unparalleled protection for the privacy of customers.

Read the Whole Series:

Part 1: Current Energy Data Privacy Methods Aren't Sufficient. We Have the Tools To Do Better.

Part 2: What Exactly is Energy Differential Privacy™? A Quick Tour of How it Works.

Part 3: Real World Use-Cases for Energy Differential Privacy™: Using EDP to Track COVID Impacts.

Learn more about Energy Differential Privacy™ on the EDP website.
